Structured Light Calibration

There is a rich body of research on camera calibration. The process is usually broken up into geometric and radiometric/photometric calibration.

A projector can essentially be thought of as the inverse of a camera. Instead of capturing a scene projected onto an image plane, as a camera does, a projector projects an image plane onto an environment.
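To make the "inverse camera" idea concrete, here is a minimal sketch assuming an ideal pinhole model with no lens distortion. The intrinsic matrix `K` and the helpers `camera_project` and `projector_ray` are hypothetical and only for illustration. A camera maps a 3D point to a pixel, while a projector maps a pixel to the ray of 3D points that pixel lights.

```python
import numpy as np

# Hypothetical intrinsics: 800 px focal length, principal point at the centre
# of a 1920x1080 image. Real values would come from a calibration step.
K = np.array([[800.0,   0.0, 960.0],
              [  0.0, 800.0, 540.0],
              [  0.0,   0.0,   1.0]])

def camera_project(point_3d, K):
    """Camera direction: a 3D point (in the device's own frame) -> pixel (u, v)."""
    x = K @ point_3d          # homogeneous image coordinates
    return x[:2] / x[2]       # perspective divide

def projector_ray(pixel, K):
    """Projector direction: a pixel (u, v) -> unit ray leaving the lens.
    Every 3D point along this ray is lit by that pixel."""
    d = np.linalg.solve(K, np.array([pixel[0], pixel[1], 1.0]))
    return d / np.linalg.norm(d)

# Round trip: any point on the ray through a pixel projects back to that pixel.
ray = projector_ray((1200.0, 300.0), K)
print(camera_project(3.0 * ray, K))  # close to (1200, 300)
```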

Due to this similarity, projector calibration is a topic of interest in the interactive computer graphics field.

In a projection mapping context, usually what is meant by "projector calibration" is "placing video content where it is supposed to go in a physical environment".

There are many strategies for performing projector calibration. Structured light is a popular one and is implemented in several applications, such as MadMapper and disguise.

In this research, Mike Walczyk and I explored the structured light "projector calibration" process, based on the academic paper that first described the technique.

To explain the structured light process, it helps to imagine a room (like the one in the figure below) in which, for some reason, you want to project an image onto the spot where two walls meet.

Example room

In the picture above, that would be a corner of the room, for instance the one at the top of the image.

For illustration purposes, let's say the image you want to project is this simple checkerboard (overlaid with the letter F to make distortion easy to see):

Pattern to project

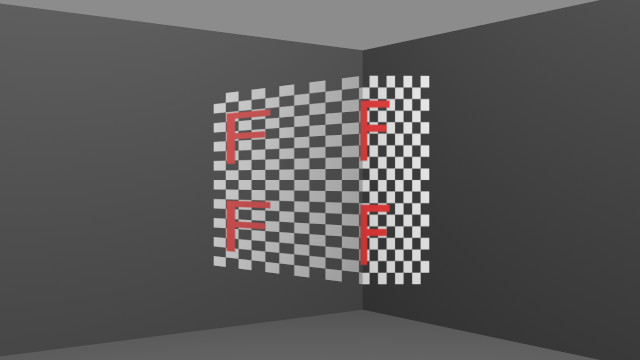

If you project that image directly onto the corner, the image will show obvious distortion.

Pattern projected with distortion

Using structured light (here, projecting a series of black-and-white patterns and photographing each one while it is projected), the distortion can be fixed.

Example structured light patterns
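One common way to build such a pattern set is binary-reflected Gray code stripes: each pattern encodes one bit of every projector pixel's column or row index, and neighbouring columns differ in only one bit, which limits decoding errors at stripe edges. The sketch below assumes that scheme and NumPy; `gray_code_patterns` is a hypothetical helper, not necessarily the exact pattern set used by Tardif et al. or by our implementation.

```python
import numpy as np

def gray_code_patterns(width, height):
    """Return black/white Gray-code stripe patterns for a width x height projector.

    Vertical-stripe images encode each pixel's column and horizontal-stripe
    images encode its row, most significant bit first. Each image is a uint8
    array of shape (height, width) with values 0 (black) or 255 (white)."""
    def bits_needed(n):
        return max(1, (n - 1).bit_length())

    cols = np.arange(width)
    rows = np.arange(height)
    gray_cols = cols ^ (cols >> 1)   # binary-reflected Gray code of each column
    gray_rows = rows ^ (rows >> 1)   # ... and of each row

    patterns = []
    for b in reversed(range(bits_needed(width))):
        stripe = ((gray_cols >> b) & 1).astype(np.uint8) * 255  # one row of stripes
        patterns.append(np.tile(stripe, (height, 1)))            # repeat down the image
    for b in reversed(range(bits_needed(height))):
        stripe = ((gray_rows >> b) & 1).astype(np.uint8) * 255  # one column of stripes
        patterns.append(np.tile(stripe[:, None], (1, width)))    # repeat across the image
    return patterns

pats = gray_code_patterns(1024, 768)
print(len(pats))  # 20: 10 column patterns + 10 row patterns
```

Practical setups often also project the inverse of each pattern, so every camera pixel can be classified by comparing the two photographs rather than against a fixed threshold.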

A structured light process acquires a complete mapping (which the source paper calls $R$) from camera pixels to projector pixels.
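Decoding is the step that turns the photographs into that mapping. Assuming Gray-code stripe patterns captured most significant bit first, each camera pixel's sequence of bright/dark observations spells out the Gray code of the projector column (or row) that lit it. The sketch below uses hypothetical helpers `gray_to_binary` and `decode_captures` with an assumed fixed brightness threshold of 128. Running it on the column captures and on the row captures and combining the results with `np.stack([cols, rows], axis=-1)` gives one possible array representation of $R$ with shape (H, W, 2); that layout is our illustrative choice, not the paper's notation.

```python
import numpy as np

def gray_to_binary(gray):
    """Convert an integer NumPy array of Gray codes to plain binary integers."""
    binary = gray.copy()
    shift = gray >> 1
    while np.any(shift):          # b = g ^ (g >> 1) ^ (g >> 2) ^ ...
        binary ^= shift
        shift >>= 1
    return binary

def decode_captures(captures, threshold=128):
    """Decode photographs of Gray-code patterns.

    captures: array of shape (n_bits, H, W), one grayscale photo per projected
    pattern, most significant bit first. Returns an (H, W) integer array giving,
    for every camera pixel, the projector coordinate (column or row) that lit it."""
    bits = (np.asarray(captures) >= threshold).astype(np.int64)
    gray = np.zeros(bits.shape[1:], dtype=np.int64)
    for plane in bits:            # accumulate one bit per photo, MSB first
        gray = (gray << 1) | plane
    return gray_to_binary(gray)

# Toy check: a 1x4 camera image whose pixels see projector columns 0..3.
cols = np.arange(4)
gray = cols ^ (cols >> 1)
caps = np.stack([((gray >> b) & 1) * 255 for b in (1, 0)])[:, None, :]  # (2, 1, 4)
print(decode_captures(caps))  # [[0 1 2 3]]
```

A real decoder also has to mask out camera pixels that no projector pixel reaches (shadows, background), since the bits decoded there are meaningless.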

Because the camera stands in for the viewer, this mapping can be used to project content that appears undistorted from the viewer's position. At the end of the process, the image is projected with corrected perspective.

Perspective corrected image projected
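Once $R$ is known, producing the corrected projector image amounts to a lookup. Here is a naive sketch, assuming $R$ is stored as an integer (H, W, 2) array holding the projector (column, row) for each camera pixel, and that the desired content is laid out as the camera (and so the viewer) should see it. Each camera pixel's desired colour is written into the projector pixel that lights it. `render_for_projector` is a hypothetical name, and this forward-splatting approach is our simplification rather than necessarily the procedure in Tardif et al.

```python
import numpy as np

def render_for_projector(content, R, proj_width, proj_height):
    """Build the projector framebuffer from content laid out in camera space.

    content: (H, W, 3) image as it should appear from the camera/viewer position.
    R: (H, W, 2) integer array; R[y, x] = (projector column, projector row)
       that lights camera pixel (x, y).
    Projector pixels that no camera pixel maps to stay black."""
    frame = np.zeros((proj_height, proj_width, 3), dtype=content.dtype)
    px, py = R[..., 0], R[..., 1]
    # Ignore camera pixels whose decoded coordinates fall outside the projector.
    valid = (px >= 0) & (px < proj_width) & (py >= 0) & (py < proj_height)
    # Copy each camera pixel's desired colour into the projector pixel that lights it.
    frame[py[valid], px[valid]] = content[valid]
    return frame

# Usage: a 2x2 grey image; the last camera pixel maps outside a 2x2 projector.
content = np.full((2, 2, 3), 200, dtype=np.uint8)
R = np.array([[[0, 0], [1, 0]],
              [[0, 1], [5, 5]]])
frame = render_for_projector(content, R, 2, 2)
```

Since it writes one projector pixel per camera pixel, projector pixels that no camera pixel maps to remain black, and when several camera pixels share a projector pixel the last write wins; a fuller implementation would interpolate to fill such holes.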

Our research included a Python implementation of structured light calibration, as well as a projector/camera simulator that was used to create the images in this post.
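A simulator is useful because the true camera-to-projector mapping is known, so a decoded mapping can be checked against it. This post does not describe our simulator's internals; as a deliberately simplified stand-in, the sketch below assumes a known ground-truth mapping `true_R` of shape (H, W, 2) giving the projector (column, row) that lights each camera pixel, or -1 where nothing is lit, and ignores lens blur, ambient light and sensor noise. `simulate_capture` is a hypothetical helper.

```python
import numpy as np

def simulate_capture(pattern, true_R):
    """Toy stand-in for a camera photographing a projected pattern.

    pattern: (Hp, Wp) or (Hp, Wp, C) projector image.
    true_R: (H, W, 2) ground-truth (column, row) of the projector pixel that
            lights each camera pixel, or -1 where the camera sees no projector light.
    Returns the (H, W[, C]) image the camera would record; unlit pixels are 0."""
    hp, wp = pattern.shape[:2]
    px, py = true_R[..., 0], true_R[..., 1]
    lit = (px >= 0) & (px < wp) & (py >= 0) & (py < hp)
    capture = np.zeros(true_R.shape[:2] + pattern.shape[2:], dtype=pattern.dtype)
    capture[lit] = pattern[py[lit], px[lit]]   # look up the projector pixel each camera pixel sees
    return capture

# Usage: a 1x2 camera; the first pixel sees projector pixel (column 2, row 1),
# the second sees nothing.
pattern = np.arange(6).reshape(2, 3)
true_R = np.array([[[2, 1], [-1, -1]]])
capture = simulate_capture(pattern, true_R)
```

Feeding simulated captures of each structured light pattern through a decoder and comparing the result with `true_R` gives a direct correctness check that is hard to get with real hardware.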

This project implemented the academic paper:

- Multi-projectors for Arbitrary Surfaces Without Explicit Calibration nor Reconstruction by J.-P. Tardif et al.