HDR // Camera Response Recovery

Today high-dynamic-range imaging (HDRI) is ubiquitous. Many smartphones contain built-in support for HDR and some even default to using HDR to capture images.

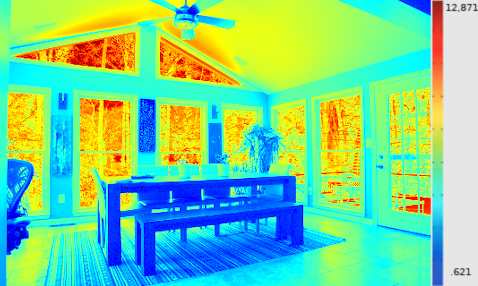

At a basic level, working with HDR data is all about capturing the radiance of the scene. A naive thought would be that pixels appearing twice as bright in an image are from areas in the photographed scene with twice as much radiance.

The pseudo-color image shows this is not the case. A tremendous range of radiance values has to be mapped to a significantly smaller range of pixel intensities.

The specific non-linear response function of an individual camera is not usually published by camera makers, who consider it a trade secret.
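To illustrate why "twice as bright" does not mean "twice the radiance", here is a minimal sketch using a made-up gamma-style response curve. The function `gamma_response` and its exponent of 2.2 are assumptions chosen for illustration only; a real camera's response will differ.

```python
def gamma_response(exposure, gamma=2.2):
    """Hypothetical camera response (illustration only, not a real camera's curve).

    Maps a normalized exposure in [0, 1] to an 8-bit pixel value.
    """
    clipped = min(max(exposure, 0.0), 1.0)  # the sensor saturates outside [0, 1]
    return round(255 * clipped ** (1.0 / gamma))


dim = gamma_response(0.2)
bright = gamma_response(0.4)  # twice the exposure of `dim`

# Under this assumed curve the pixel value grows by far less than a factor of two.
print(dim, bright, bright / dim)
```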

Recovering this function enables more than just the common "HDR look" (and the hyper-realistic tone-mapped style): it also makes it possible to achieve more realistic motion blur, color correction, and edge detection in images.

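With a stack of aligned images at different exposures (such as in the last figure in this post), the non-linear response function can be recovered starting from the following model of how a pixel value is formed:

$$Z_{ij} = f(E_i \Delta t_j)$$

Here $Z_{ij}$ is the recorded pixel value, $E_i$ is the radiance at pixel location $i$, and $\Delta t_j$ is the exposure time of image $j$, so $i$ indexes over pixel locations and $j$ indexes over the different exposures.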

With some manipulation this simplifies to:

$$ g(Z_{ij}) = \ln E_i + \ln \Delta t_j $$

where $g = \ln f^{-1}$.
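The manipulation can be spelled out in two steps. This assumes, as Debevec and Malik do, that $f$ is monotonically increasing and therefore invertible: first apply $f^{-1}$ to both sides of the pixel model, then take the natural logarithm.

$$
\begin{aligned}
f^{-1}(Z_{ij}) &= E_i \, \Delta t_j \\
\ln f^{-1}(Z_{ij}) &= \ln E_i + \ln \Delta t_j
\end{aligned}
$$

The left-hand side of the second line is exactly $g(Z_{ij})$, and the product of radiance and exposure time has become a sum, which is what makes the problem linear in the unknowns $g$ and $\ln E_i$.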

Written in this form, $g$ (and therefore eventually $f$) can be recovered up to a scale factor by using the singular value decomposition (SVD) to minimize a related least-squares objective function.
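As a rough sketch of what this minimization can look like, the following follows the linear system described in the Debevec and Malik paper; it is not necessarily how this project implements it. The function name `solve_response`, the `smoothness` default, and the `+ 1` in the weights are assumptions made for illustration. The unknown scale appears as an additive constant in the log domain, which is pinned down here by requiring $g$ to be zero at the middle pixel value. `np.linalg.lstsq` solves the system with an SVD-based routine.

```python
import numpy as np


def solve_response(Z, log_dt, smoothness=100.0, levels=256):
    """Estimate g = ln f^-1 and ln E_i from sampled pixel values.

    Z      : (n_pixels, n_exposures) integer pixel values in [0, levels - 1]
    log_dt : (n_exposures,) natural log of each exposure time
    Returns (g, log_E) with shapes (levels,) and (n_pixels,).
    """
    Z = np.asarray(Z, dtype=int)
    log_dt = np.asarray(log_dt, dtype=float)
    n_pix, n_exp = Z.shape
    mid = levels // 2

    # Hat-shaped weights: trust mid-range values more than near-clipped ones.
    # The "+ 1" (an assumption of this sketch) keeps every weight non-zero.
    z = np.arange(levels)
    w = np.minimum(z, levels - 1 - z) + 1.0

    n_rows = n_pix * n_exp + 1 + (levels - 2)
    A = np.zeros((n_rows, levels + n_pix))
    b = np.zeros(n_rows)

    # Data terms: w * (g(Z_ij) - ln E_i) = w * ln dt_j
    k = 0
    for i in range(n_pix):
        for j in range(n_exp):
            wij = w[Z[i, j]]
            A[k, Z[i, j]] = wij
            A[k, levels + i] = -wij
            b[k] = wij * log_dt[j]
            k += 1

    # Fix the free offset (the unknown scale): g(mid) = 0
    A[k, mid] = 1.0
    k += 1

    # Smoothness terms: penalize the second difference of g
    for v in range(1, levels - 1):
        A[k, v - 1] = smoothness * w[v]
        A[k, v] = -2.0 * smoothness * w[v]
        A[k, v + 1] = smoothness * w[v]
        k += 1

    # Least-squares solve (LAPACK's SVD-based driver under the hood)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:levels], x[levels:]
```

In practice `Z` would hold only a small sample of pixel locations, since every sampled pixel adds an unknown $\ln E_i$ to the system.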

With this function recovered, the radiance can be visualized in a number of ways (usually by scaling the radiance to the range of the display device).
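One simple way to do that scaling is sketched below. It is only one possible display mapping, and it assumes the radiance is available as an array of log values. The function name `radiance_to_display` and the display gamma of 2.2 are assumptions for illustration, not something the papers prescribe.

```python
import numpy as np


def radiance_to_display(log_E, gamma=2.2):
    """Map log radiance to 8-bit display values (illustrative sketch).

    Linearly rescales radiance to [0, 1], then applies an assumed display gamma.
    """
    E = np.exp(np.asarray(log_E, dtype=float))
    span = max(E.max() - E.min(), 1e-12)  # guard against a constant image
    scaled = (E - E.min()) / span
    return np.round(255 * scaled ** (1.0 / gamma)).astype(np.uint8)
```

A plain linear rescale like this lets the brightest regions dominate the output range, which is one reason tone-mapping operators are often used instead.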

This project implements the academic papers:

- Recovering High Dynamic Range Radiance Maps from Photographs by Paul E. Debevec and Jitendra M. Malik
- Determining the camera response from images: what is knowable? by M.D. Grossberg and S.K. Nayar
- Modeling the space of camera response functions by M.D. Grossberg and S.K. Nayar

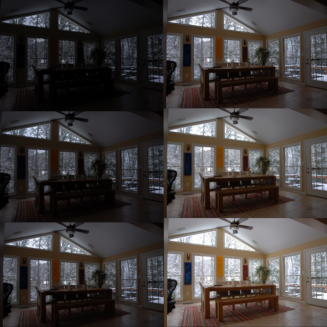

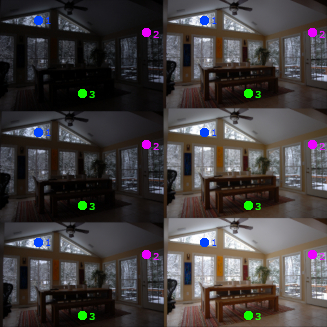

Input images

HDR Result image

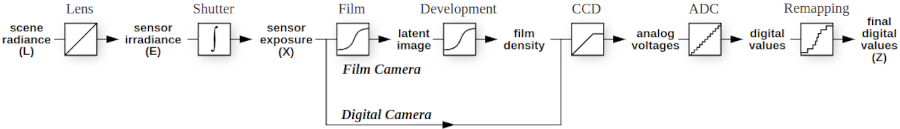

Image capture pipeline

False color image showing radiance levels

HDR input images showing example corresponding keypoints